How to fine-tune pfSense for 1Gbit throughput on APU2/APU3/APU4

Update 2023-02-22: Added information about pfSense 2.6.0 (no change from 2.5.0).

Update 2021-02-20: Added information about pfSense 2.5.0.

Update 2020-10-28: Added a note about slow PPPoE handling.

(outdated) Update 2020-07-19: This article has been updated for pfSense 2.4.5-p1. It's still possible to get 1Gbit on pfSense 2.4.5 with APU2, APU3 and APU4.

(outdated) Update 2019-01-15: This article has been updated for pfSense 2.4.4. It's now possible to get full gigabit throughput when utilizing multiple NIC queues.

Note from the author

This article was originally written for pfSense 2.3, then it was updated for pfSense 2.4.4, then for pfSense 2.4.5-p1, and now for pfSense 2.5.0.

There were many changes in pfSense over the last several years, and each version needed different tweaks to get gigabit performance on APU hardware. Fortunately, pfSense 2.5.0 does not need any special tweaks: it performs well right after installation.

The instructions below have been updated for pfSense 2.5.0. I can't guarantee that they will work in future releases, but I'll do my best to update this article every time something changes.

pfSense 2.6.0 behaves the same as 2.5.0.

Background information

APU2, APU3 and APU4 motherboards have four 1 GHz CPU cores, but by default pfSense uses only one core per connection. This limitation still exists; however, single-core performance has improved considerably.

The APU2E4 has high-performance Intel I210-AT network interfaces. These NICs have four transmit and four receive queues, so they can process up to four connections in parallel. Before pfSense 2.5.0, some fine-tuning was necessary for pfSense to take advantage of multiple NIC queues and route at 1 Gbit/s when using more than one connection. pfSense 2.5.0 does not need these tweaks; it uses multiple queues by default.

The other APU boards (APU2C0, APU2C2, APU3, APU4) have Intel I211-AT network interfaces with two transmit and two receive queues. This NIC is less performant, but it is still good enough to deliver 1 Gbit/s on pfSense when more than one connection is used. Note: Intel's PDF specification for the I211-AT contains a mistake; it states that there are four queues, while there are only two.

Routers rarely handle just one connection, so single-connection throughput is rarely a bottleneck in the real world. A web browser opens about 8 TCP connections per website, torrent clients open hundreds of connections, Netflix opens multiple TCP connections when streaming video, etc.

Single connection performance

Interestingly, pfSense 2.5.0 can route only about 590 Mbit/s over a single connection, which is handled by a single CPU core. This is a performance regression compared to tuned pfSense 2.4.5 (about 750 Mbit/s). If I find a way to tweak it in the future, I'll update this article.

| Operating system | Single connection | Multiple connections |
|---|---|---|
| pfSense 2.4.5 (with tweaks) | 750 Mbit/s | 1 Gbit/s |
| pfSense 2.5.0 (no tweaks required) | 590 Mbit/s | 1 Gbit/s |
| OpenWRT | 1 Gbit/s | 1 Gbit/s |
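The single-connection and multiple-connection numbers can be read through a deliberately simple model: each busy NIC queue is served by its own CPU core, each core tops out at roughly the single-connection figure, and the total is capped by the gigabit link. The sketch below is our own back-of-the-envelope illustration, not something pfSense computes; the function name `estimated_throughput` and the assumption that connections spread evenly across queues are hypothetical.

```python
"""Back-of-the-envelope model of multi-queue routing throughput (illustrative only)."""
from __future__ import annotations

LINE_RATE_MBIT = 1000  # gigabit Ethernet link
PER_CORE_MBIT = 590    # pfSense 2.5.0 single-connection figure quoted in this article


def estimated_throughput(connections: int, nic_queues: int, cores: int = 4,
                         per_core: float = PER_CORE_MBIT,
                         line_rate: float = LINE_RATE_MBIT) -> float:
    """Rough upper bound on routed throughput in Mbit/s.

    Hypothetical assumptions: connections spread evenly over the NIC queues,
    and every busy queue is handled by a separate CPU core.
    """
    busy_queues = min(connections, nic_queues, cores)
    return min(busy_queues * per_core, line_rate)


if __name__ == "__main__":
    for nic, queues in (("I211-AT", 2), ("I210-AT", 4)):
        for conns in (1, 2, 4):
            rate = estimated_throughput(conns, queues)
            print(f"{nic}, {conns} connection(s): ~{rate:.0f} Mbit/s")
```

With one connection the model returns the 590 Mbit/s single-core figure; with two or more connections even the two-queue I211-AT reaches the gigabit cap. iperf3 reports about 940 Mbit/s rather than 1000 because of Ethernet, IP and TCP header overhead. Real NICs hash flows to queues, so two connections can land on the same queue; the model ignores that.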

Gigabit config for pfSense 2.5.0

No tweaks are required! Don't apply any of the pfSense 2.4.5 instructions below.

pfSense 2.5.0 is able to utilize multiple NIC queues by default, and therefore no tweaks are necessary.

Gigabit config for pfSense 2.4.5

Note: the instructions below should NOT be applied to pfSense 2.5.0. I'm leaving this information here for historical reasons.

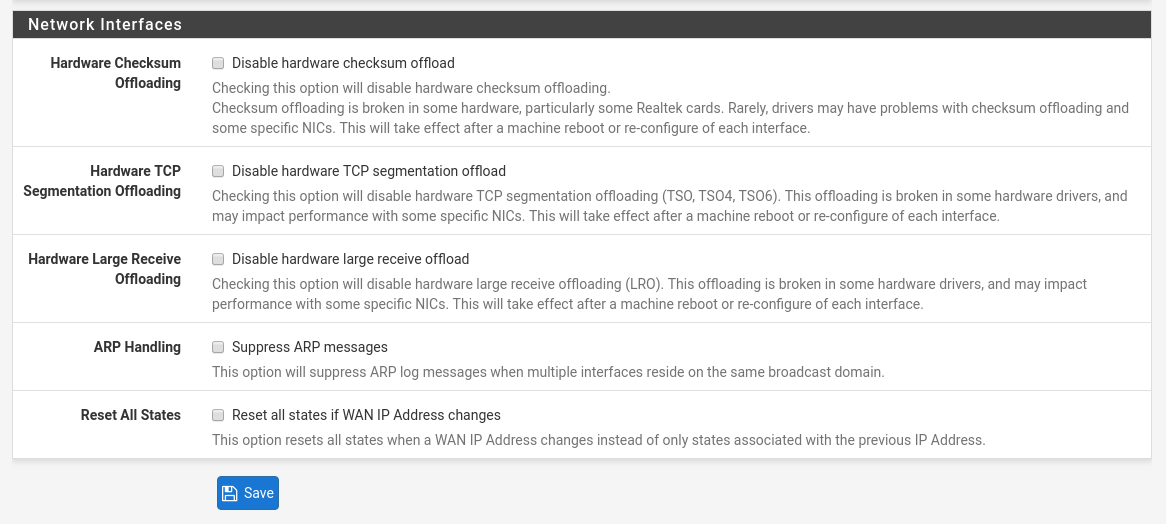

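First, in the pfSense web panel, go to System -> Advanced -> Networking and scroll to the bottom.

Make sure that the first three checkboxes under "Network Interfaces" are unchecked. Each of these checkboxes disables a hardware offload feature, so leaving them unchecked keeps the offloads enabled: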

- Hardware Checksum Offloading

- Hardware TCP Segmentation Offloading

- Hardware Large Receive Offloading

As shown in the screenshot.

Note: some users say that TSO and LRO should be disabled, because enabling them may actually decrease performance. This is not what we see in our tests. If you have specific information about the conditions under which this is true, please send us an email.

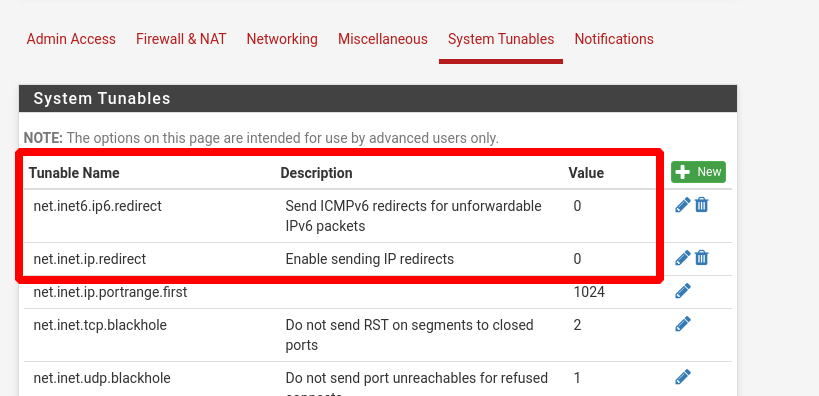

Now go to the web panel -> System -> Advanced -> System Tunables.

Find the following two tunables and set them to 0.

    net.inet6.ip6.redirect=0
    net.inet.ip.redirect=0

See the screenshot below.

These two tunables are what changed between 2.4.4 and 2.4.5. Background for these settings: https://redmine.pfsense.org/issues/10465
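To confirm that both tunables are active, you can run `sysctl net.inet.ip.redirect net.inet6.ip6.redirect` from the shell; FreeBSD's `sysctl` prints one `name: value` line per tunable. The Python sketch below checks such output against the expected values. `parse_sysctl`, `mismatches` and `read_sysctl` are hypothetical helper names, and running `read_sysctl` assumes a Python 3 interpreter on the router itself.

```python
"""Check FreeBSD `sysctl` output against expected values (illustrative sketch)."""
from __future__ import annotations

import subprocess

EXPECTED = {"net.inet.ip.redirect": "0", "net.inet6.ip6.redirect": "0"}


def parse_sysctl(output: str) -> dict[str, str]:
    """Parse lines of the form 'name: value' as printed by `sysctl name ...`."""
    values = {}
    for line in output.splitlines():
        name, sep, value = line.partition(":")
        if sep:
            values[name.strip()] = value.strip()
    return values


def mismatches(output: str, expected: dict[str, str]) -> dict[str, str | None]:
    """Return tunables whose value differs from the expected one (None = missing)."""
    actual = parse_sysctl(output)
    return {name: actual.get(name) for name, want in expected.items()
            if actual.get(name) != want}


def read_sysctl(names: list[str]) -> str:
    """Hypothetical helper: run `sysctl` locally; only works where that command exists."""
    return subprocess.run(["sysctl", *names], capture_output=True, text=True,
                          check=True).stdout
```

On the router, `mismatches(read_sysctl(list(EXPECTED)), EXPECTED)` should return an empty dict once both tunables are set to 0.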

Now we need to edit some settings from the shell. You can SSH to the box or connect with the serial cable.

To get full gigabit throughput, edit /boot/loader.conf.local (create it if it doesn't exist) and insert the following settings:

    # agree with Intel license terms
    legal.intel_igb.license_ack="1"
    # this is the magic. If you don't set this, queues won't be utilized properly
    # -1 means no limit on how many packets are processed per pass
    hw.igb.rx_process_limit="-1"
    hw.igb.tx_process_limit="-1"

After saving this file, reboot your router to apply it.
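If you would rather script the change than edit /boot/loader.conf.local by hand, the following Python sketch merges the settings above into existing file contents without duplicating keys. `merge_loader_conf` is a hypothetical helper of ours, not a pfSense tool; it assumes the simple `key="value"` line format used above, assumes Python 3 is available wherever you run it, and only transforms text, so you still write the result back to the file yourself.

```python
"""Hypothetical helper: merge loader tunables into loader.conf.local text."""
from __future__ import annotations

import re

SETTINGS = {
    "legal.intel_igb.license_ack": "1",
    "hw.igb.rx_process_limit": "-1",
    "hw.igb.tx_process_limit": "-1",
}

# Captures the key of assignment lines such as: hw.igb.rx_process_limit="100"
_ASSIGNMENT = re.compile(r"^\s*([A-Za-z0-9_.]+)\s*=")


def merge_loader_conf(text: str, settings: dict[str, str]) -> str:
    """Return `text` with every key in `settings` set to its value.

    The first assignment of a key is rewritten in place (a trailing comment on
    that line is dropped), later duplicates are removed so they cannot override
    the new value, other lines are kept, and missing keys are appended.
    """
    seen = set()
    merged = []
    for line in text.splitlines():
        match = _ASSIGNMENT.match(line)
        key = match.group(1) if match else None
        if key in settings:
            if key not in seen:
                merged.append(f'{key}="{settings[key]}"')
                seen.add(key)
            continue
        merged.append(line)
    merged.extend(f'{k}="{v}"' for k, v in settings.items() if k not in seen)
    return "\n".join(merged) + "\n"


if __name__ == "__main__":
    existing = '# my old settings\nhw.igb.rx_process_limit="100"\n'
    print(merge_loader_conf(existing, SETTINGS), end="")
```

Running the helper a second time on its own output leaves the text unchanged, so it is safe to re-apply.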

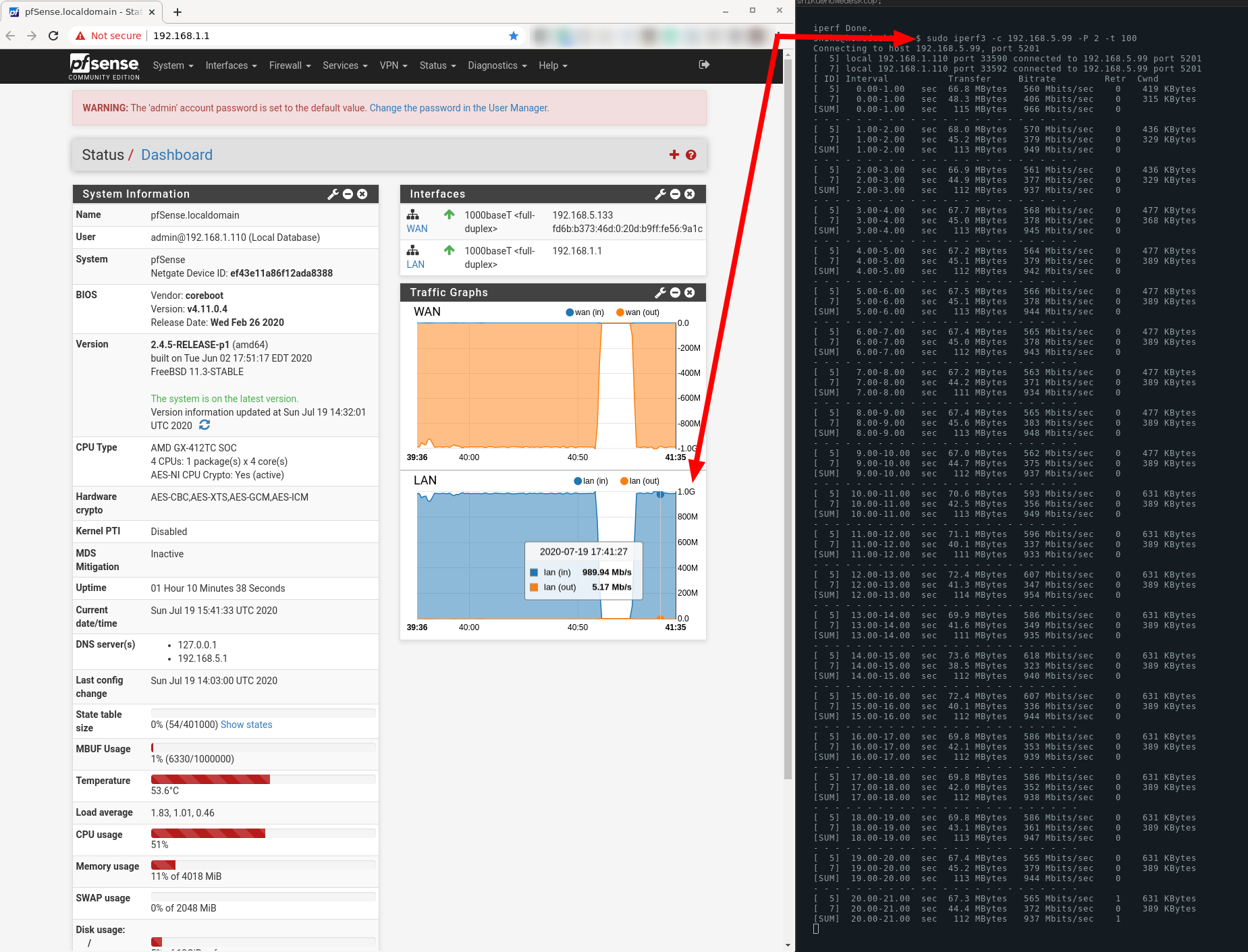

Now you can run some tests to verify that your settings worked properly. The easiest way is to use iperf3 with multiple connections, where one device is on the LAN and the other one on the internet.

iperf3 APU4 throughput test

We set up an iperf3 server on the internet and connected to it from a host on the LAN.

On the server (somewhere on the internet), run the following command:

iperf3 -s

On your LAN run this command:

iperf3 -c SERVER_IP_HERE -P 4

If everything went well, you should see about 940 Mbit/s of throughput, similar to the snippet below:

    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 5] 43.00-44.00 sec 56.1 MBytes 470 Mbits/sec 0 481 KBytes
    [ 7] 43.00-44.00 sec 55.7 MBytes 468 Mbits/sec 0 438 KBytes
    [SUM] 43.00-44.00 sec 112 MBytes 938 Mbits/sec 0
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 5] 44.00-45.00 sec 56.4 MBytes 473 Mbits/sec 0 481 KBytes
    [ 7] 44.00-45.00 sec 56.1 MBytes 470 Mbits/sec 0 438 KBytes
    [SUM] 44.00-45.00 sec 112 MBytes 943 Mbits/sec 0
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 5] 45.00-46.00 sec 56.1 MBytes 470 Mbits/sec 0 481 KBytes
    [ 7] 45.00-46.00 sec 55.6 MBytes 466 Mbits/sec 0 438 KBytes
    [SUM] 45.00-46.00 sec 112 MBytes 936 Mbits/sec 0
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 5] 46.00-47.00 sec 57.7 MBytes 484 Mbits/sec 0 481 KBytes
    [ 7] 46.00-47.00 sec 55.0 MBytes 461 Mbits/sec 0 438 KBytes
    [SUM] 46.00-47.00 sec 113 MBytes 945 Mbits/sec 0
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 5] 47.00-48.00 sec 55.2 MBytes 463 Mbits/sec 0 481 KBytes
    [ 7] 47.00-48.00 sec 55.8 MBytes 468 Mbits/sec 0 438 KBytes
    [SUM] 47.00-48.00 sec 111 MBytes 931 Mbits/sec 0
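To turn a long iperf3 run into a single number, you can average the per-second `[SUM]` lines. The sketch below assumes the text format shown in the iperf3 output (interval, transfer, then a bitrate in Kbits/sec, Mbits/sec or Gbits/sec); `sum_rates_mbit` is a hypothetical helper name. iperf3 can also emit JSON with its `-J` flag, which is sturdier to parse if you automate this.

```python
"""Average the per-interval [SUM] bitrates from iperf3 text output (sketch)."""
from __future__ import annotations

import re
from statistics import mean

_TO_MBIT = {"K": 1e-3, "M": 1.0, "G": 1e3}
# Matches e.g. "[SUM] 43.00-44.00 sec 112 MBytes 938 Mbits/sec 0"
_SUM_LINE = re.compile(
    r"^\[SUM\]\s+\S+\s+sec\s+[\d.]+\s+\w+\s+([\d.]+)\s+([KMG])bits/sec")


def sum_rates_mbit(output: str) -> list[float]:
    """Return the bitrate of every per-interval [SUM] line, in Mbit/s."""
    rates = []
    for line in output.splitlines():
        if "sender" in line or "receiver" in line:
            continue  # skip the end-of-test totals, keep per-interval lines only
        match = _SUM_LINE.match(line.strip())
        if match:
            rates.append(float(match.group(1)) * _TO_MBIT[match.group(2)])
    return rates


if __name__ == "__main__":
    sample = """\
[ 5] 43.00-44.00 sec 56.1 MBytes 470 Mbits/sec 0 481 KBytes
[SUM] 43.00-44.00 sec 112 MBytes 938 Mbits/sec 0
[SUM] 44.00-45.00 sec 112 MBytes 943 Mbits/sec 0
[SUM] 45.00-46.00 sec 112 MBytes 936 Mbits/sec 0
[SUM] 46.00-47.00 sec 113 MBytes 945 Mbits/sec 0
[SUM] 47.00-48.00 sec 111 MBytes 931 Mbits/sec 0
"""
    print(f"average: {mean(sum_rates_mbit(sample)):.1f} Mbit/s")  # average: 938.6 Mbit/s
```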

Here's a screenshot from the pfSense panel; take a look at the traffic graph.

I think this is quite neat. It's possible to get full gigabit on pfSense when utilizing multiple NIC queues and multiple CPUs!

PPPoE connection is slow on pfSense and OPNsense

Note: the above tweaks won't deliver full gigabit throughput if your ISP uses PPPoE authentication.

If you don't know what PPPoE is, this problem likely doesn't affect you. It's an older technology that is rarely used by internet providers.

A PPPoE connection cannot use Receive Side Scaling (RSS) load balancing, so the multi-queue feature of the Intel I210/I211 NICs goes unused: the NIC puts all WAN traffic into queue 0 and leaves the other queues idle. This is because all WAN traffic is encapsulated into a single PPPoE stream, and the NIC cannot see the inner IP headers it would need to distribute flows across multiple receive queues.

As a result, a single CPU core receives all PPPoE traffic, regardless of how many different flows are inside the encapsulated stream. A single core running at 1.0-1.4 GHz cannot process a full gigabit of traffic, so you will never reach 1 Gbit/s routing throughput over PPPoE on an APU2/3/4 board with a BSD-based operating system: one CPU core is 100% utilised while the other three cores sit idle.
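The Python sketch below models that behaviour. It is a toy: `Frame` and `rx_queue` are hypothetical names, the 5-tuple hash is a simple sum rather than the Toeplitz hash real NICs use, and only plain IPv4 and PPPoE session frames are modelled. It shows the same eight connections spreading across both queues of an I211-AT when sent as plain IP, but all landing in queue 0 once they are wrapped in PPPoE.

```python
"""Toy model of why PPPoE traffic lands in a single NIC receive queue."""
from __future__ import annotations

import ipaddress
from dataclasses import dataclass

IPV4 = 0x0800           # EtherType for plain IPv4
PPPOE_SESSION = 0x8864  # EtherType for PPPoE session frames


@dataclass(frozen=True)
class Frame:
    ethertype: int
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    proto: int = 6  # TCP


def rx_queue(frame: Frame, num_queues: int) -> int:
    """Choose a receive queue roughly the way an RSS-capable NIC would (IPv4 only)."""
    if frame.ethertype != IPV4:
        # The NIC does not parse the IP header inside a PPPoE frame,
        # so it has no flow hash and everything goes to queue 0.
        return 0
    # Toy hash over the 5-tuple; real NICs use the Toeplitz hash instead.
    flow_hash = (int(ipaddress.ip_address(frame.src_ip))
                 + int(ipaddress.ip_address(frame.dst_ip))
                 + frame.src_port + frame.dst_port + frame.proto)
    return flow_hash % num_queues


# Eight TCP connections to the same server, differing only in source port.
flows = [("192.0.2.10", "198.51.100.7", 40000 + i, 443) for i in range(8)]
plain = {rx_queue(Frame(IPV4, *f), num_queues=2) for f in flows}
pppoe = {rx_queue(Frame(PPPOE_SESSION, *f), num_queues=2) for f in flows}
print("plain IPv4 queues:", sorted(plain))  # plain IPv4 queues: [0, 1]
print("PPPoE queues:", sorted(pppoe))       # PPPoE queues: [0]
```

Since each receive queue is serviced by one CPU core, the PPPoE case means one busy core, which is exactly the bottleneck described above.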

APU2/3/4 is therefore not recommended for full gigabit over PPPoE with BSD (pfSense / OPNsense).

There are, however, a few settings that can slightly increase the throughput.

The numbers quoted below are for pfSense 2.4.5-p1. On pfSense 2.5.0 performance is lower (I don't have the exact numbers).

Without any tweaks, the APU2 delivers about 340 Mbps over PPPoE.

With net.isr.dispatch=deferred, the APU delivers about 420 Mbps.

If you also add net.inet.ip.intr_queue_maxlen=3000, throughput goes up to about 450 Mbps.
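In relative terms, the two settings add roughly a third over the untuned baseline but still stay below half of a gigabit. The arithmetic, using the pfSense 2.4.5-p1 figures quoted above (the dictionary labels and `gain_pct` are our own names):

```python
"""Relative PPPoE throughput gains from the figures quoted in this article."""
from __future__ import annotations

BASELINE_MBPS = 340  # no tweaks
RESULTS_MBPS = {
    "net.isr.dispatch=deferred": 420,
    "deferred + net.inet.ip.intr_queue_maxlen=3000": 450,
}
GIGABIT_MBPS = 1000


def gain_pct(before: float, after: float) -> float:
    """Percentage improvement from `before` to `after`."""
    return (after / before - 1) * 100


for setting, mbps in RESULTS_MBPS.items():
    print(f"{setting}: {mbps} Mbps, +{gain_pct(BASELINE_MBPS, mbps):.1f}% vs. baseline, "
          f"{mbps / GIGABIT_MBPS:.0%} of gigabit")
```

This works out to about +23.5% and +32.4% over the baseline, or 42% and 45% of a gigabit.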

If you happen to use PPPoE, you can try these settings:

    net.isr.dispatch=deferred
    net.inet.ip.intr_queue_maxlen=3000

You can also consider using OpenWRT, which easily reaches 1Gbit/s with PPPoE.

If you have any questions about this article, ping us at info@teklager.se