pfSense 2.4.4 throughput benchmark for APU2, BIOS v4.9.0.2

In 2017, we published a throughput test for pfSense 2.3.3 on the APU2C0 that showed a maximum throughput of about 620 Mbit/s.

Things have changed. pfSense has released several OS updates (we are now on 2.4.4), and PC Engines has released several BIOS updates for the APU. The latest BIOS, v4.9.0.2, is supposed to enable CPU boost to 1.4 GHz.

Let's see if we get better performance today (February 2019).

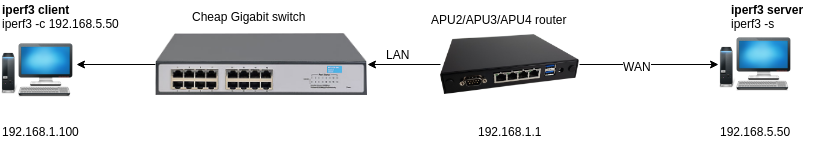

All tests were performed on APU2C2, but the same results will be achieved using any other APU2, APU3 or APU4 router.

Network topology for the test

Router specification:

- APU2C2 (2GB RAM)

- pfSense 2.4.4

- powerd enabled, and disabled (doesn't seem to make a difference)

- router configured to use multiple NIC queues, and route on multiple CPU cores, as described here.

Test 1: one TCP connection

The first test is using a single TCP connection (-P 1). This forces all traffic to be routed and processed by a single CPU core.

Old BIOS v4.0.23

root@homedesktop:/home/sniku# iperf3 -c 192.168.5.50 -P 1
Connecting to host 192.168.5.50, port 5201
[ 5] local 192.168.1.111 port 50932 connected to 192.168.5.50 port 5201
[ ID] Interval Transfer Bitrate Retr Cwnd
[ 5] 0.00-1.00 sec 94.1 MBytes 790 Mbits/sec 0 3.07 MBytes
[ 5] 1.00-2.00 sec 90.0 MBytes 755 Mbits/sec 0 3.07 MBytes
[ 5] 2.00-3.00 sec 91.2 MBytes 765 Mbits/sec 0 3.07 MBytes
[ 5] 3.00-4.00 sec 90.0 MBytes 755 Mbits/sec 0 3.07 MBytes
[ 5] 4.00-5.00 sec 90.0 MBytes 754 Mbits/sec 0 3.07 MBytes
[ 5] 5.00-6.00 sec 88.8 MBytes 746 Mbits/sec 0 3.07 MBytes
[ 5] 6.00-7.00 sec 90.0 MBytes 755 Mbits/sec 0 3.07 MBytes
[ 5] 7.00-8.00 sec 88.8 MBytes 744 Mbits/sec 0 3.07 MBytes
[ 5] 8.00-9.00 sec 88.8 MBytes 744 Mbits/sec 0 3.07 MBytes
[ 5] 9.00-10.00 sec 88.8 MBytes 744 Mbits/sec 0 3.07 MBytes
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval Transfer Bitrate Retr
[ 5] 0.00-10.00 sec 900 MBytes 755 Mbits/sec 0 sender
[ 5] 0.00-10.00 sec 899 MBytes 754 Mbits/sec receiver
iperf Done.

I executed 10 runs, and all results were very similar (in Mbit/s): 771, 755, 763, 756, 763, 755, 761, 758, 767.

This is a much better result in comparison to the test made in 2017, where the throughput was limited to about 620Mbit/s.

As can be seen above, the throughput is very consistent within a single run as well. Across runs, it varies between 755 Mbit/s and 771 Mbit/s.
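As a sanity check on the iperf3 summary lines above, the reported bitrate can be recomputed from the transferred volume. This is only a sketch: it assumes that iperf3 prints "MBytes" as binary megabytes (2**20 bytes) and "Mbits/sec" as decimal megabits (10**6 bits), which is consistent with the figures in this run. The function name is made up for illustration.

```python
# Recompute the iperf3 bitrate from the "Transfer" column of the summary lines.
# Assumption: "MBytes" means 2**20 bytes and "Mbits/sec" means 10**6 bits/s.

def mbytes_to_mbits_per_sec(mbytes: float, seconds: float) -> float:
    """Convert a transfer volume over an interval into Mbit/s."""
    bits = mbytes * 2**20 * 8
    return bits / seconds / 1e6

# Values taken from the old-BIOS single-connection run above.
sender = mbytes_to_mbits_per_sec(900, 10.0)
receiver = mbytes_to_mbits_per_sec(899, 10.0)

print(f"sender:   {sender:.0f} Mbit/s")
print(f"receiver: {receiver:.0f} Mbit/s")
```

Both values round to the 755 and 754 Mbits/sec that iperf3 printed, so the summary line and the per-second lines are using the same units.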

Throughput is very consistent.

New BIOS v4.9.0.2

root@homedesktop:/home/sniku# iperf3 -c 192.168.5.50 -P 1
Connecting to host 192.168.5.50, port 5201
[ 5] local 192.168.1.111 port 51070 connected to 192.168.5.50 port 5201
[ ID] Interval Transfer Bitrate Retr Cwnd
[ 5] 0.00-1.00 sec 106 MBytes 890 Mbits/sec 0 2.99 MBytes
[ 5] 1.00-2.00 sec 102 MBytes 860 Mbits/sec 0 2.99 MBytes
[ 5] 2.00-3.00 sec 104 MBytes 870 Mbits/sec 0 2.99 MBytes
[ 5] 3.00-4.00 sec 101 MBytes 849 Mbits/sec 0 2.99 MBytes
[ 5] 4.00-5.00 sec 102 MBytes 860 Mbits/sec 0 2.99 MBytes
[ 5] 5.00-6.00 sec 98.8 MBytes 828 Mbits/sec 0 2.99 MBytes
[ 5] 6.00-7.00 sec 96.2 MBytes 807 Mbits/sec 0 3.14 MBytes
[ 5] 7.00-8.00 sec 96.2 MBytes 807 Mbits/sec 0 3.14 MBytes
[ 5] 8.00-9.00 sec 97.5 MBytes 818 Mbits/sec 0 3.14 MBytes
[ 5] 9.00-10.00 sec 100 MBytes 839 Mbits/sec 0 3.14 MBytes
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval Transfer Bitrate Retr
[ 5] 0.00-10.00 sec 1005 MBytes 843 Mbits/sec 0 sender
[ 5] 0.00-10.00 sec 1004 MBytes 843 Mbits/sec receiver
iperf Done.

I have executed 10 runs, results were 843, 797, 807, 799, 795, 785, 819, 816, 795, 835, 785, 774.

As shown, throughput is higher, but there is quite a lot of variation. Across my runs it varies between 774 Mbit/s and 843 Mbit/s. It also varies within a single run, which suggests that CPU boost may be turning on and off. Nevertheless, it's a nice improvement!
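To put numbers on "higher, but less consistent", the per-run results quoted for Test 1 can be summarized with a few lines of Python. This is a rough sketch rather than a rigorous analysis: the lists are copied exactly as printed in the text (nine values for the old BIOS, twelve for the new one), and the helper name is made up for illustration.

```python
from statistics import mean, stdev

# Per-run throughput in Mbit/s, exactly as listed in the text for Test 1.
old_bios = [771, 755, 763, 756, 763, 755, 761, 758, 767]
new_bios = [843, 797, 807, 799, 795, 785, 819, 816, 795, 835, 785, 774]

def summarize(runs):
    """Return basic descriptive statistics for a list of run results."""
    return {
        "n": len(runs),
        "mean": mean(runs),
        "stdev": stdev(runs),
        "spread": max(runs) - min(runs),
    }

old_stats = summarize(old_bios)
new_stats = summarize(new_bios)
gain = new_stats["mean"] / old_stats["mean"] - 1

for label, s in (("old BIOS", old_stats), ("new BIOS", new_stats)):
    print(f"{label}: n={s['n']} mean={s['mean']:.1f} "
          f"stdev={s['stdev']:.1f} spread={s['spread']}")
print(f"mean gain: {gain:.1%}")
```

Averaged over the listed runs, the gain is smaller than a comparison of the two sample runs suggests, and the spread between the best and worst run is several times larger on the new BIOS.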

Throughput is higher, but less consistent.

Test 2: two TCP connections

This test is similar to the first one, but we are using 2 TCP connections (-P 2). This allows the traffic to be processed by 2 CPU cores.

Old BIOS v4.0.23

root@homedesktop:/home/sniku# iperf3 -c 192.168.5.50 -P 2
Connecting to host 192.168.5.50, port 5201
[ 5] local 192.168.1.111 port 50880 connected to 192.168.5.50 port 5201
[ 7] local 192.168.1.111 port 50882 connected to 192.168.5.50 port 5201
[ ID] Interval Transfer Bitrate Retr Cwnd
[ 5] 0.00-1.00 sec 71.5 MBytes 600 Mbits/sec 2 407 KBytes
[ 7] 0.00-1.00 sec 43.3 MBytes 364 Mbits/sec 6 228 KBytes
[SUM] 0.00-1.00 sec 115 MBytes 964 Mbits/sec 8
- - - - - - - - - - - - - - - - - - - - - - - - -
[ 5] 1.00-2.00 sec 67.5 MBytes 566 Mbits/sec 1 417 KBytes
[ 7] 1.00-2.00 sec 45.1 MBytes 378 Mbits/sec 0 311 KBytes
[SUM] 1.00-2.00 sec 113 MBytes 944 Mbits/sec 1
- - - - - - - - - - - - - - - - - - - - - - - - -
[ 5] 2.00-3.00 sec 67.6 MBytes 567 Mbits/sec 0 419 KBytes
[ 7] 2.00-3.00 sec 44.7 MBytes 375 Mbits/sec 0 314 KBytes
[SUM] 2.00-3.00 sec 112 MBytes 942 Mbits/sec 0
- - - - - - - - - - - - - - - - - - - - - - - - -
[ 5] 3.00-4.00 sec 67.4 MBytes 565 Mbits/sec 0 420 KBytes
[ 7] 3.00-4.00 sec 45.1 MBytes 378 Mbits/sec 0 314 KBytes
[SUM] 3.00-4.00 sec 112 MBytes 944 Mbits/sec 0
- - - - - - - - - - - - - - - - - - - - - - - - -
[ 5] 4.00-5.00 sec 66.7 MBytes 559 Mbits/sec 0 423 KBytes
[ 7] 4.00-5.00 sec 44.4 MBytes 373 Mbits/sec 0 317 KBytes
[SUM] 4.00-5.00 sec 111 MBytes 932 Mbits/sec 0
- - - - - - - - - - - - - - - - - - - - - - - - -
[ 5] 5.00-6.00 sec 67.7 MBytes 568 Mbits/sec 1 423 KBytes
[ 7] 5.00-6.00 sec 45.4 MBytes 381 Mbits/sec 0 318 KBytes
[SUM] 5.00-6.00 sec 113 MBytes 949 Mbits/sec 1
- - - - - - - - - - - - - - - - - - - - - - - - -
[ 5] 6.00-7.00 sec 67.7 MBytes 568 Mbits/sec 0 423 KBytes
[ 7] 6.00-7.00 sec 44.3 MBytes 372 Mbits/sec 0 318 KBytes
[SUM] 6.00-7.00 sec 112 MBytes 939 Mbits/sec 0
- - - - - - - - - - - - - - - - - - - - - - - - -
[ 5] 7.00-8.00 sec 67.0 MBytes 562 Mbits/sec 0 424 KBytes
[ 7] 7.00-8.00 sec 45.3 MBytes 380 Mbits/sec 0 318 KBytes
[SUM] 7.00-8.00 sec 112 MBytes 942 Mbits/sec 0
- - - - - - - - - - - - - - - - - - - - - - - - -
[ 5] 8.00-9.00 sec 67.2 MBytes 564 Mbits/sec 0 424 KBytes
[ 7] 8.00-9.00 sec 44.7 MBytes 375 Mbits/sec 0 318 KBytes
[SUM] 8.00-9.00 sec 112 MBytes 938 Mbits/sec 0
- - - - - - - - - - - - - - - - - - - - - - - - -
[ 5] 9.00-10.00 sec 56.7 MBytes 476 Mbits/sec 0 424 KBytes
[ 7] 9.00-10.00 sec 56.1 MBytes 470 Mbits/sec 0 406 KBytes
[SUM] 9.00-10.00 sec 113 MBytes 946 Mbits/sec 0
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval Transfer Bitrate Retr
[ 5] 0.00-10.00 sec 667 MBytes 559 Mbits/sec 4 sender
[ 5] 0.00-10.00 sec 665 MBytes 558 Mbits/sec receiver
[ 7] 0.00-10.00 sec 458 MBytes 385 Mbits/sec 6 sender
[ 7] 0.00-10.00 sec 457 MBytes 383 Mbits/sec receiver
[SUM] 0.00-10.00 sec 1.10 GBytes 944 Mbits/sec 10 sender
[SUM] 0.00-10.00 sec 1.10 GBytes 941 Mbits/sec receiver
iperf Done.

I executed 10 runs. The results (in Mbit/s) were: 942, 944, 944, 944, 764, 944, 944, 770, 769, 944.

Sometimes both connections get assigned to the same CPU core, so processing is limited to one core. This results in a throughput of about 760 Mbit/s, just like in Test 1.
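One way to reason about how often two connections end up on the same core is to treat the assignment as an independent, uniformly random choice per flow. That is an assumption made here purely for illustration, not a description of how the NIC or the FreeBSD kernel actually maps flows to queues; the function name and the core counts tried below are hypothetical.

```python
from math import perm

def p_shared_core(flows: int, cores: int) -> float:
    """Probability that at least two flows share a core.

    Assumes each flow is placed on one of `cores` cores independently
    and uniformly at random (an illustrative model only).
    """
    if flows > cores:
        return 1.0  # pigeonhole: some core must take two flows
    # 1 - (ways to place all flows on distinct cores) / (all placements)
    return 1 - perm(cores, flows) / cores**flows

for cores in (2, 4):
    print(f"{cores} cores, 2 flows: {p_shared_core(2, cores):.0%}")
```

Under this model, two flows on four cores collide a quarter of the time, which is in the same ballpark as the 3 slow runs out of 10 observed above. Ten runs is a small sample, so this is suggestive at best.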

Throughput is close to 1 Gbit/s when using more than 1 connection, most of the time.

New BIOS v4.9.0.2

root@homedesktop:/home/sniku# iperf3 -c 192.168.5.50 -P 2
Connecting to host 192.168.5.50, port 5201
[ 5] local 192.168.1.112 port 56446 connected to 192.168.5.50 port 5201
[ 7] local 192.168.1.112 port 56448 connected to 192.168.5.50 port 5201
[ ID] Interval Transfer Bitrate Retr Cwnd
[ 5] 0.00-1.00 sec 67.7 MBytes 568 Mbits/sec 0 434 KBytes
[ 7] 0.00-1.00 sec 47.4 MBytes 398 Mbits/sec 0 325 KBytes
[SUM] 0.00-1.00 sec 115 MBytes 965 Mbits/sec 0
- - - - - - - - - - - - - - - - - - - - - - - - -
[ 5] 1.00-2.00 sec 67.7 MBytes 568 Mbits/sec 0 434 KBytes
[ 7] 1.00-2.00 sec 44.8 MBytes 376 Mbits/sec 0 325 KBytes
[SUM] 1.00-2.00 sec 113 MBytes 944 Mbits/sec 0
- - - - - - - - - - - - - - - - - - - - - - - - -
[ 5] 2.00-3.00 sec 67.6 MBytes 567 Mbits/sec 0 434 KBytes
[ 7] 2.00-3.00 sec 44.7 MBytes 375 Mbits/sec 0 325 KBytes
[SUM] 2.00-3.00 sec 112 MBytes 942 Mbits/sec 0
- - - - - - - - - - - - - - - - - - - - - - - - -
[ 5] 3.00-4.00 sec 66.6 MBytes 558 Mbits/sec 0 434 KBytes
[ 7] 3.00-4.00 sec 44.9 MBytes 377 Mbits/sec 0 325 KBytes
[SUM] 3.00-4.00 sec 111 MBytes 935 Mbits/sec 0
- - - - - - - - - - - - - - - - - - - - - - - - -
[ 5] 4.00-5.00 sec 67.8 MBytes 569 Mbits/sec 0 434 KBytes
[ 7] 4.00-5.00 sec 44.8 MBytes 376 Mbits/sec 0 325 KBytes
[SUM] 4.00-5.00 sec 113 MBytes 945 Mbits/sec 0
- - - - - - - - - - - - - - - - - - - - - - - - -
[ 5] 5.00-6.00 sec 66.6 MBytes 559 Mbits/sec 0 434 KBytes
[ 7] 5.00-6.00 sec 44.8 MBytes 376 Mbits/sec 0 325 KBytes
[SUM] 5.00-6.00 sec 111 MBytes 935 Mbits/sec 0
- - - - - - - - - - - - - - - - - - - - - - - - -
[ 5] 6.00-7.00 sec 67.6 MBytes 567 Mbits/sec 0 434 KBytes
[ 7] 6.00-7.00 sec 44.9 MBytes 376 Mbits/sec 0 325 KBytes
[SUM] 6.00-7.00 sec 112 MBytes 943 Mbits/sec 0
- - - - - - - - - - - - - - - - - - - - - - - - -
[ 5] 7.00-8.00 sec 67.5 MBytes 567 Mbits/sec 0 434 KBytes
[ 7] 7.00-8.00 sec 44.7 MBytes 375 Mbits/sec 0 325 KBytes
[SUM] 7.00-8.00 sec 112 MBytes 942 Mbits/sec 0
- - - - - - - - - - - - - - - - - - - - - - - - -
[ 5] 8.00-9.00 sec 67.4 MBytes 566 Mbits/sec 0 434 KBytes
[ 7] 8.00-9.00 sec 45.1 MBytes 378 Mbits/sec 0 325 KBytes
[SUM] 8.00-9.00 sec 113 MBytes 944 Mbits/sec 0
- - - - - - - - - - - - - - - - - - - - - - - - -
[ 5] 9.00-10.00 sec 66.9 MBytes 561 Mbits/sec 0 434 KBytes
[ 7] 9.00-10.00 sec 45.1 MBytes 378 Mbits/sec 0 325 KBytes
[SUM] 9.00-10.00 sec 112 MBytes 940 Mbits/sec 0
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval Transfer Bitrate Retr
[ 5] 0.00-10.00 sec 674 MBytes 565 Mbits/sec 0 sender
[ 5] 0.00-10.00 sec 672 MBytes 564 Mbits/sec receiver
[ 7] 0.00-10.00 sec 451 MBytes 379 Mbits/sec 0 sender
[ 7] 0.00-10.00 sec 450 MBytes 377 Mbits/sec receiver
[SUM] 0.00-10.00 sec 1.10 GBytes 944 Mbits/sec 0 sender
[SUM] 0.00-10.00 sec 1.10 GBytes 941 Mbits/sec receiver
iperf Done.

I executed 10 runs, and all results were at about 944 Mbit/s.

In my previous tests I experienced the same problem of both connections being assigned to one core, as in the old-BIOS results above, but it did not happen this time. It looks like the new BIOS mitigates this problem to some degree, or perhaps it's just a coincidence.
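How plausible is "just a coincidence"? A back-of-the-envelope estimate is sketched below. It assumes that runs are independent and that the true rate of slow runs on the new BIOS equals the 3 out of 10 seen with the old BIOS. Both are assumptions, and the rate itself comes from only ten runs, so the result is a rough indication and nothing more.

```python
def p_no_slow_runs(p_slow: float, runs: int) -> float:
    """Chance of seeing zero slow runs, assuming independent runs."""
    return (1 - p_slow) ** runs

# Rate of single-core (slow) runs observed with the old BIOS: 3 of 10.
observed_rate = 3 / 10
p_clean = p_no_slow_runs(observed_rate, 10)

print(f"chance of 10 clean runs by luck alone: {p_clean:.1%}")
```

Under these assumptions a clean series of ten runs would happen by chance only a few percent of the time, which hints at a real effect but does not rule out coincidence. More runs on both BIOS versions would be needed to tell.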

Throughput is close to 1 Gbit/s when using more than 1 connection.

Test 3: forty TCP connections

This test is similar to the first one, but we are using 40 TCP connections (-P 40). This allows the traffic to be evenly distributed between 4 CPU cores.

Old BIOS v4.0.23

I executed 10 runs, and the result was always 944 Mbit/s.

Throughput is close to 1 Gbit/s when using 40 connections.

New BIOS v4.9.0.2

I executed 10 runs, and the result was always 944 Mbit/s.

Throughput is close to 1 Gbit/s when using 40 connections.
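The plateau of roughly 941 to 944 Mbit/s seen in these runs is about what a saturated gigabit Ethernet link can deliver as TCP payload, so the router is most likely no longer the bottleneck here. The sketch below shows the arithmetic. It assumes a standard 1500-byte MTU, IPv4, no VLAN tag, and TCP timestamps enabled (the usual Linux default); none of these settings are stated in the test setup above.

```python
LINK_MBIT = 1000          # gigabit Ethernet line rate
MTU = 1500                # assumed IP packet size in bytes
# Per-frame Ethernet overhead on the wire:
# 14 header + 4 FCS + 8 preamble/SFD + 12 inter-frame gap
ETH_OVERHEAD = 14 + 4 + 8 + 12
IP_HEADER = 20            # IPv4 without options
TCP_HEADER = 20           # TCP without options
TCP_TIMESTAMPS = 12       # timestamp option, padded to 12 bytes

def tcp_goodput_mbit(timestamps: bool = True) -> float:
    """Maximum TCP payload rate on a saturated link, in Mbit/s."""
    payload = MTU - IP_HEADER - TCP_HEADER
    if timestamps:
        payload -= TCP_TIMESTAMPS
    on_wire = MTU + ETH_OVERHEAD
    return LINK_MBIT * payload / on_wire

print(f"with timestamps:    {tcp_goodput_mbit(True):.1f} Mbit/s")
print(f"without timestamps: {tcp_goodput_mbit(False):.1f} Mbit/s")
```

With timestamps the ceiling works out to about 941.5 Mbit/s, which matches the receiver-side figure that iperf3 reports.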

Kernel sometimes assigns processing of 2 heavy tasks to the same CPU core

I don't know too much about CPU scheduling in BSD, but it looks like it is sometimes not ideal. In a few cases the kernel schedules the processing of multiple connections on the same CPU core, even when the 3 other cores are not doing anything. This limits throughput to about 760 Mbit/s.

When this happens, you can run "top -P CC" to see what is going on. Here is what it looks like when 1 CPU core is processing 2 TCP connections.

last pid: 59123; load averages: 1.16, 0.85, 0.60 up 0+00:15:26 20:17:26
50 processes: 1 running, 49 sleeping
CPU 0: 0.0% user, 0.0% nice, 0.8% system, 13.8% interrupt, 85.4% idle
CPU 1: 0.0% user, 0.0% nice, 0.4% system, 14.2% interrupt, 85.4% idle
CPU 2: 0.0% user, 0.0% nice, 1.2% system, 5.5% interrupt, 93.3% idle
CPU 3: 0.0% user, 0.0% nice, 0.0% system, 100% interrupt, 0.0% idle
Mem: 66M Active, 46M Inact, 440M Wired, 228K Buf, 1270M Free
ARC: 84M Total, 18M MFU, 64M MRU, 168K Anon, 357K Header, 1507K Other
37M Compressed, 93M Uncompressed, 2.49:1 Ratio
Swap: 2048M Total, 2048M Free

When things go as planned, 2 CPU cores are sharing the load, and we get full gigabit throughput.

last pid: 82545; load averages: 1.25, 0.88, 0.41 up 0+00:05:17 20:28:48
50 processes: 1 running, 49 sleeping
CPU 0: 0.0% user, 0.0% nice, 0.0% system, 31.4% interrupt, 68.6% idle
CPU 1: 0.4% user, 0.0% nice, 1.6% system, 0.0% interrupt, 98.0% idle
CPU 2: 0.0% user, 0.0% nice, 0.8% system, 76.5% interrupt, 22.7% idle
CPU 3: 0.0% user, 0.0% nice, 0.8% system, 51.4% interrupt, 47.8% idle
Mem: 65M Active, 46M Inact, 433M Wired, 264K Buf, 1277M Free
ARC: 84M Total, 18M MFU, 65M MRU, 168K Anon, 356K Header, 1506K Other
37M Compressed, 92M Uncompressed, 2.47:1 Ratio
Swap: 2048M Total, 2048M Free

This happens rarely, so in practice it won't be a problem for most users. Still, it's interesting to learn something new :-)
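If you collect many such snapshots, spotting the saturated-core case by eye gets tedious. Below is a minimal sketch that scans the per-CPU lines of top output for cores with almost no idle time. The regular expression is written against the two snapshots shown above and may need adjusting for other top versions; the function name and the 5% idle threshold are arbitrary choices made for illustration.

```python
import re

# Matches per-CPU lines such as:
# "CPU 3: 0.0% user, 0.0% nice, 0.0% system, 100% interrupt, 0.0% idle"
CPU_LINE = re.compile(
    r"CPU\s+(\d+):.*?([\d.]+)% interrupt,\s+([\d.]+)% idle"
)

def saturated_cores(top_output: str, idle_threshold: float = 5.0):
    """Return the CPU numbers whose idle percentage is below the threshold."""
    return [
        int(cpu)
        for cpu, _interrupt, idle in CPU_LINE.findall(top_output)
        if float(idle) < idle_threshold
    ]

# Per-CPU lines copied from the two snapshots above.
one_core_busy = """\
CPU 0: 0.0% user, 0.0% nice, 0.8% system, 13.8% interrupt, 85.4% idle
CPU 1: 0.0% user, 0.0% nice, 0.4% system, 14.2% interrupt, 85.4% idle
CPU 2: 0.0% user, 0.0% nice, 1.2% system, 5.5% interrupt, 93.3% idle
CPU 3: 0.0% user, 0.0% nice, 0.0% system, 100% interrupt, 0.0% idle
"""
load_shared = """\
CPU 0: 0.0% user, 0.0% nice, 0.0% system, 31.4% interrupt, 68.6% idle
CPU 1: 0.4% user, 0.0% nice, 1.6% system, 0.0% interrupt, 98.0% idle
CPU 2: 0.0% user, 0.0% nice, 0.8% system, 76.5% interrupt, 22.7% idle
CPU 3: 0.0% user, 0.0% nice, 0.8% system, 51.4% interrupt, 47.8% idle
"""

print("saturated (first snapshot): ", saturated_cores(one_core_busy))
print("saturated (second snapshot):", saturated_cores(load_shared))
```

A non-empty list during a two-connection test is a hint that both flows ended up on the same core.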

Data

If you want to analyze the data yourself, see the raw data files from all test runs here.

CPU stress test benchmark

While we are at it, let's stress-test CPU and see what the CPU-only improvement is.

Old BIOS v4.0.23

Single core CPU stress test:

root@debian:~# sysbench --test=cpu --cpu-max-prime=20000 run --num-threads=1

sysbench 0.4.12: multi-threaded system evaluation benchmark

Running the test with following options:

Number of threads: 1

Doing CPU performance benchmark

Threads started!

Done.

Maximum prime number checked in CPU test: 20000

Test execution summary:

total time: 72.9001s

total number of events: 10000

total time taken by event execution: 72.8936

per-request statistics:

min: 6.62ms

avg: 7.29ms

max: 9.20ms

approx. 95 percentile: 7.59ms

Threads fairness:

events (avg/stddev): 10000.0000/0.00

execution time (avg/stddev): 72.8936/0.00

A single CPU core takes close to 73 seconds to complete the benchmark (10000 events, each checking primes up to 20000).

New BIOS v4.9.0.2

Single core CPU stress test

root@debian:~# sysbench --test=cpu --cpu-max-prime=20000 run --num-threads=1

sysbench 0.4.12: multi-threaded system evaluation benchmark

Running the test with following options:

Number of threads: 1

Doing CPU performance benchmark

Threads started!

Done.

Maximum prime number checked in CPU test: 20000

Test execution summary:

total time: 54.6983s

total number of events: 10000

total time taken by event execution: 54.6941

per-request statistics:

min: 5.44ms

avg: 5.47ms

max: 8.00ms

approx. 95 percentile: 5.48ms

Threads fairness:

events (avg/stddev): 10000.0000/0.00

execution time (avg/stddev): 54.6941/0.00

A single CPU core takes close to 55 seconds to complete the same benchmark.

Wow, the difference here is very significant. Run time drops by 25%, which means about 33% more work done per second!
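"Percent improvement" can mean two different things for a timed benchmark, so it is worth spelling out the arithmetic. The sketch below uses only the two "total time" values and the event count from the sysbench output above; the variable names are chosen for illustration.

```python
# "total time" and "total number of events" from the two sysbench runs.
old_time_s = 72.9001   # BIOS v4.0.23
new_time_s = 54.6983   # BIOS v4.9.0.2
events = 10000

time_reduction = 1 - new_time_s / old_time_s   # how much less time it takes
speedup = old_time_s / new_time_s              # how much more work per second

print(f"time reduction: {time_reduction:.1%}")
print(f"speedup:        {speedup:.2f}x")
print(f"events/s old:   {events / old_time_s:.1f}")
print(f"events/s new:   {events / new_time_s:.1f}")
```

The 25% figure is the reduction in run time. Expressed as throughput, the same result is a speedup of about 1.33x, which is the number to compare against the clock ratio that the boost is supposed to provide.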

Crypto benchmark. Will VPN also be faster on the new BIOS?

Many customers are using OpenVPN on the APU boxes. OpenVPN always uses a single core for encryption and decryption, so let's see if the new BIOS improves the VPN speed.

All these crypto tests have been executed on Debian. More tests are needed on pfSense/BSD platform.

Old BIOS v4.0.23

aes-128-cbc speed test

root@debian:~# openssl speed -elapsed -evp aes-128-cbc
You have chosen to measure elapsed time instead of user CPU time.
Doing aes-128-cbc for 3s on 16 size blocks: 14383771 aes-128-cbc's in 3.00s
Doing aes-128-cbc for 3s on 64 size blocks: 6768663 aes-128-cbc's in 3.00s
Doing aes-128-cbc for 3s on 256 size blocks: 2212471 aes-128-cbc's in 3.00s
Doing aes-128-cbc for 3s on 1024 size blocks: 622353 aes-128-cbc's in 3.00s
Doing aes-128-cbc for 3s on 8192 size blocks: 80451 aes-128-cbc's in 3.00s
Doing aes-128-cbc for 3s on 16384 size blocks: 39933 aes-128-cbc's in 3.00s
OpenSSL 1.1.0f 25 May 2017
built on: reproducible build, date unspecified
options:bn(64,64) rc4(8x,int) des(int) aes(partial) blowfish(ptr)
compiler: gcc -DDSO_DLFCN -DHAVE_DLFCN_H -DNDEBUG -DOPENSSL_THREADS -DOPENSSL_NO_STATIC_ENGINE -DOPENSSL_PIC -DOPENSSL_IA32_SSE2 -DOPENSSL_BN_ASM_MONT -DOPENSSL_BN_ASM_MONT5 -DOPENSSL_BN_ASM_GF2m -DSHA1_ASM -DSHA256_ASM -DSHA512_ASM -DRC4_ASM -DMD5_ASM -DAES_ASM -DVPAES_ASM -DBSAES_ASM -DGHASH_ASM -DECP_NISTZ256_ASM -DPADLOCK_ASM -DPOLY1305_ASM -DOPENSSLDIR="\"/usr/lib/ssl\"" -DENGINESDIR="\"/usr/lib/x86_64-linux-gnu/engines-1.1\""
The 'numbers' are in 1000s of bytes per second processed.
type 16 bytes 64 bytes 256 bytes 1024 bytes 8192 bytes 16384 bytes
aes-128-cbc 76713.45k 144398.14k 188797.53k 212429.82k 219684.86k 218087.42k

This test was performed on Debian 9. It will have to be repeated on pfSense/BSD.

aes-256-cbc speed test

root@debian:~# openssl speed -elapsed -evp aes-256-cbc
You have chosen to measure elapsed time instead of user CPU time.
Doing aes-256-cbc for 3s on 16 size blocks: 12723922 aes-256-cbc's in 3.00s
Doing aes-256-cbc for 3s on 64 size blocks: 5423190 aes-256-cbc's in 3.00s
Doing aes-256-cbc for 3s on 256 size blocks: 1677103 aes-256-cbc's in 3.00s
Doing aes-256-cbc for 3s on 1024 size blocks: 457506 aes-256-cbc's in 3.00s
Doing aes-256-cbc for 3s on 8192 size blocks: 58599 aes-256-cbc's in 3.00s
Doing aes-256-cbc for 3s on 16384 size blocks: 29178 aes-256-cbc's in 3.00s
OpenSSL 1.1.0f 25 May 2017
built on: reproducible build, date unspecified
options:bn(64,64) rc4(8x,int) des(int) aes(partial) blowfish(ptr)
compiler: gcc -DDSO_DLFCN -DHAVE_DLFCN_H -DNDEBUG -DOPENSSL_THREADS -DOPENSSL_NO_STATIC_ENGINE -DOPENSSL_PIC -DOPENSSL_IA32_SSE2 -DOPENSSL_BN_ASM_MONT -DOPENSSL_BN_ASM_MONT5 -DOPENSSL_BN_ASM_GF2m -DSHA1_ASM -DSHA256_ASM -DSHA512_ASM -DRC4_ASM -DMD5_ASM -DAES_ASM -DVPAES_ASM -DBSAES_ASM -DGHASH_ASM -DECP_NISTZ256_ASM -DPADLOCK_ASM -DPOLY1305_ASM -DOPENSSLDIR="\"/usr/lib/ssl\"" -DENGINESDIR="\"/usr/lib/x86_64-linux-gnu/engines-1.1\""
The 'numbers' are in 1000s of bytes per second processed.
type 16 bytes 64 bytes 256 bytes 1024 bytes 8192 bytes 16384 bytes
aes-256-cbc 67860.92k 115694.72k 143112.79k 156162.05k 160014.34k 159350.78k

New BIOS v4.9.0.2

aes-128-cbc speed test

root@debian:~# openssl speed -elapsed -evp aes-128-cbc
You have chosen to measure elapsed time instead of user CPU time.
Doing aes-128-cbc for 3s on 16 size blocks: 17630245 aes-128-cbc's in 3.00s
Doing aes-128-cbc for 3s on 64 size blocks: 8256436 aes-128-cbc's in 3.00s
Doing aes-128-cbc for 3s on 256 size blocks: 2779482 aes-128-cbc's in 3.00s
Doing aes-128-cbc for 3s on 1024 size blocks: 781873 aes-128-cbc's in 3.00s
Doing aes-128-cbc for 3s on 8192 size blocks: 101212 aes-128-cbc's in 3.00s
Doing aes-128-cbc for 3s on 16384 size blocks: 50068 aes-128-cbc's in 3.00s
OpenSSL 1.1.0f 25 May 2017
built on: reproducible build, date unspecified
options:bn(64,64) rc4(8x,int) des(int) aes(partial) blowfish(ptr)
compiler: gcc -DDSO_DLFCN -DHAVE_DLFCN_H -DNDEBUG -DOPENSSL_THREADS -DOPENSSL_NO_STATIC_ENGINE -DOPENSSL_PIC -DOPENSSL_IA32_SSE2 -DOPENSSL_BN_ASM_MONT -DOPENSSL_BN_ASM_MONT5 -DOPENSSL_BN_ASM_GF2m -DSHA1_ASM -DSHA256_ASM -DSHA512_ASM -DRC4_ASM -DMD5_ASM -DAES_ASM -DVPAES_ASM -DBSAES_ASM -DGHASH_ASM -DECP_NISTZ256_ASM -DPADLOCK_ASM -DPOLY1305_ASM -DOPENSSLDIR="\"/usr/lib/ssl\"" -DENGINESDIR="\"/usr/lib/x86_64-linux-gnu/engines-1.1\""
The 'numbers' are in 1000s of bytes per second processed.
type 16 bytes 64 bytes 256 bytes 1024 bytes 8192 bytes 16384 bytes
aes-128-cbc 94027.97k 176137.30k 237182.46k 266879.32k 276376.23k 273438.04k

Performance improved by ~20% (between 22% and 26% in the table above), largely independent of the block size. This should translate to roughly 20% more throughput on OpenVPN when using aes-128-cbc.

aes-256-cbc speed test

root@debian:~# openssl speed -elapsed -evp aes-256-cbc
You have chosen to measure elapsed time instead of user CPU time.
Doing aes-256-cbc for 3s on 16 size blocks: 15604939 aes-256-cbc's in 3.00s
Doing aes-256-cbc for 3s on 64 size blocks: 6746434 aes-256-cbc's in 3.00s
Doing aes-256-cbc for 3s on 256 size blocks: 2104621 aes-256-cbc's in 3.00s
Doing aes-256-cbc for 3s on 1024 size blocks: 574371 aes-256-cbc's in 3.00s
Doing aes-256-cbc for 3s on 8192 size blocks: 73630 aes-256-cbc's in 3.00s
Doing aes-256-cbc for 3s on 16384 size blocks: 36881 aes-256-cbc's in 3.00s
OpenSSL 1.1.0f 25 May 2017
built on: reproducible build, date unspecified
options:bn(64,64) rc4(8x,int) des(int) aes(partial) blowfish(ptr)
compiler: gcc -DDSO_DLFCN -DHAVE_DLFCN_H -DNDEBUG -DOPENSSL_THREADS -DOPENSSL_NO_STATIC_ENGINE -DOPENSSL_PIC -DOPENSSL_IA32_SSE2 -DOPENSSL_BN_ASM_MONT -DOPENSSL_BN_ASM_MONT5 -DOPENSSL_BN_ASM_GF2m -DSHA1_ASM -DSHA256_ASM -DSHA512_ASM -DRC4_ASM -DMD5_ASM -DAES_ASM -DVPAES_ASM -DBSAES_ASM -DGHASH_ASM -DECP_NISTZ256_ASM -DPADLOCK_ASM -DPOLY1305_ASM -DOPENSSLDIR="\"/usr/lib/ssl\"" -DENGINESDIR="\"/usr/lib/x86_64-linux-gnu/engines-1.1\""
The 'numbers' are in 1000s of bytes per second processed.
type 16 bytes 64 bytes 256 bytes 1024 bytes 8192 bytes 16384 bytes
aes-256-cbc 83226.34k 143923.93k 179594.33k 196051.97k 201058.99k 201419.43k

The same improvement of roughly 20% is observed on aes-256-cbc.
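The per-block-size gains can be computed directly from the openssl speed summary rows above. The sketch below copies those rows (in thousands of bytes per second) and prints the relative improvement for each block size; the dictionary layout and the helper name are just one way to organize the numbers.

```python
BLOCK_SIZES = [16, 64, 256, 1024, 8192, 16384]

# Summary rows from `openssl speed`, in 1000s of bytes per second.
results = {
    "aes-128-cbc": {
        "old": [76713.45, 144398.14, 188797.53, 212429.82, 219684.86, 218087.42],
        "new": [94027.97, 176137.30, 237182.46, 266879.32, 276376.23, 273438.04],
    },
    "aes-256-cbc": {
        "old": [67860.92, 115694.72, 143112.79, 156162.05, 160014.34, 159350.78],
        "new": [83226.34, 143923.93, 179594.33, 196051.97, 201058.99, 201419.43],
    },
}

def gains(old, new):
    """Relative throughput gain per block size (0.25 means 25% faster)."""
    return [n / o - 1 for o, n in zip(old, new)]

all_gains = []
for cipher, rows in results.items():
    cipher_gains = gains(rows["old"], rows["new"])
    all_gains.extend(cipher_gains)
    line = ", ".join(
        f"{size}B: {g:.1%}" for size, g in zip(BLOCK_SIZES, cipher_gains)
    )
    print(f"{cipher}: {line}")

print(f"range: {min(all_gains):.1%} to {max(all_gains):.1%}")
```

The gains are somewhat above the round 20% figure and are slightly larger for bigger blocks. These are raw cipher numbers on Debian, so actual OpenVPN throughput will depend on much more than this.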

Conclusion

The new BIOS (v4.9.0.2) noticeably improves CPU performance. It looks like the CPU boost is working. However, it's hard to say what the actual CPU frequency is during the boost, since the regular tools (cpufreq, powerd) are not able to detect it.

AMD claims that this CPU can boost up to 1400 MHz, which should translate to roughly a 40% performance improvement. In practice, we see a little less.

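With the new BIOS, single-core boost helps routing performance when using 1 network connection. The sample runs improved by about 10% (from about 750 Mbit/s to about 840 Mbit/s), although averaged over all the runs listed in Test 1 the gain is closer to 6% (from about 761 Mbit/s to about 804 Mbit/s). This is great, but it's not the 40% we were hoping for.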

When using more than one network connection, the APU is able to route at close to 1 Gbit/s on pfSense regardless of the BIOS version.

The CPU stress test showed a very significant 25% improvement. CPU-heavy applications, such as Snort or other IDS/IPS applications, should see a significant performance improvement.

Crypto performance improved by about 20%, which should translate to roughly 20% faster OpenVPN.

You should upgrade your BIOS to v4.9.0.2 or later :-)